A recent book, “Noise: A Flaw in Human Judgment Kindle Edition” by Daniel Kahneman, speaks to the overwhelming role “noise” plays in the decision-making process. Research has shown stunning results that indicate we are not as consistent or unbiased in making decisions as we thought we were. To quote Kahneman, we have evidence that “the same doctor, the same judge, the same interviewer, or the same customer service agent will make different decisions depending on whether it is morning or afternoon, or Monday rather than Wednesday. These are examples of noise: variability in judgments that should be identical.”

The book has excellent advice on reducing noise, showing that “with a few simple remedies, people can reduce noise and bias and make far better decisions.” The ways to deal with noise reduction depend on context, goals, and people. And, the fact is, while it may not be surprising to learn what one person thinks is 5-stars may be 4-stars for someone else, it may be surprising to know that even the same person can change their scale within a short amount of time due entirely to external factors.

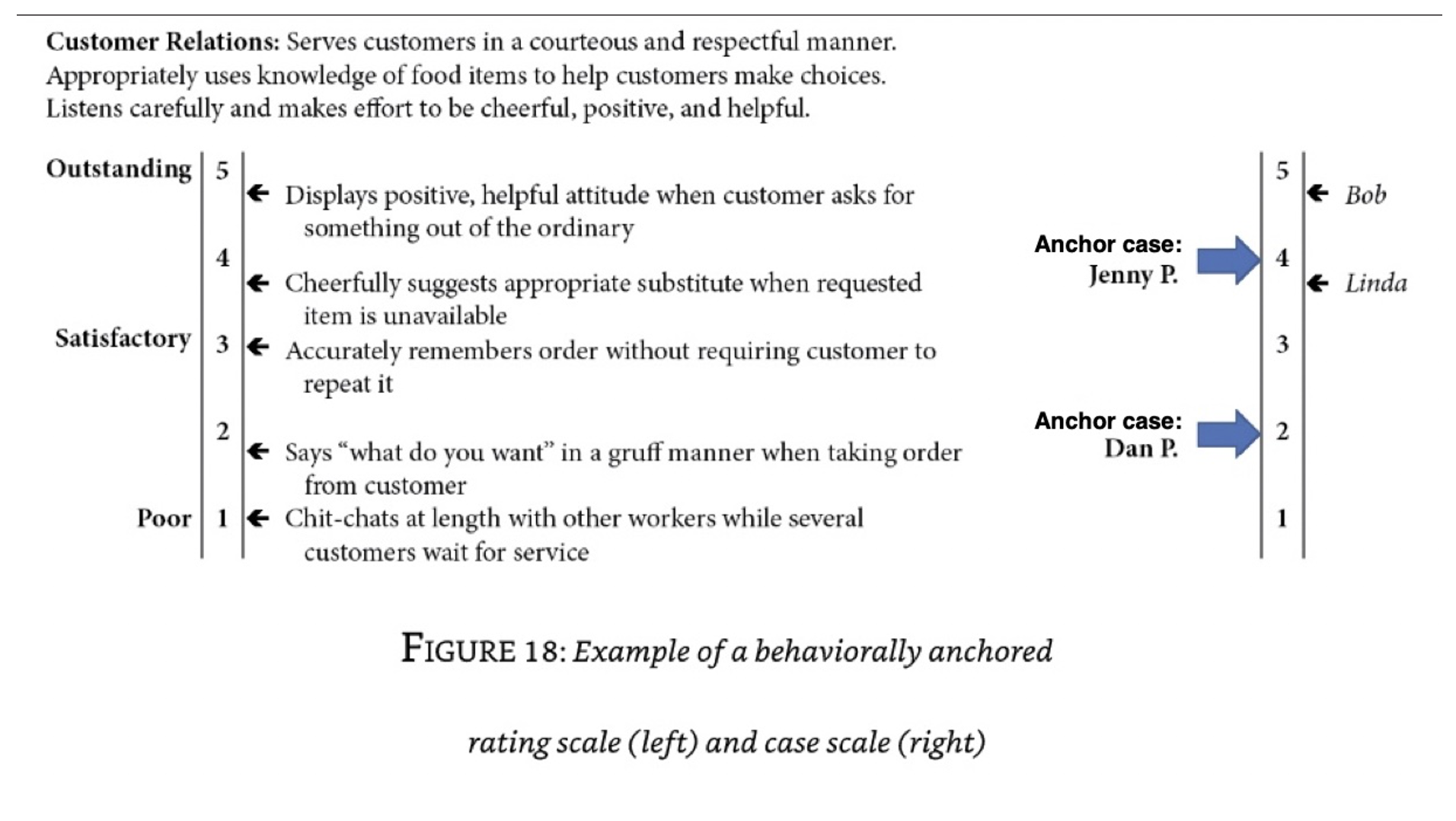

Specifically, for market researchers, the book shows an example of rating scale questions and how survey design can reduce the variability in judgments that should be identical. For example, market researchers will often ask questions in surveys about ratings. Research and evidence indicate that when asking scale questions, researchers should use “anchor cases,” together with “frame-of-reference training,” to design questions with responses that allow the respondent to compare their findings with those “anchor cases.” Using this model “provides demonstrably less noisy, and more accurate ratings.” An example of this is in Figure 18 below:

The challenge is that designing questionnaires this way requires customization which in turn takes time. Many out-of-the-box survey software tools make it challenging or even impossible to use “anchor cases” within rating scales, further exacerbating the noise problems for market research analysts and users of the insights. Using anchor case examples requires thought, consideration, and even conversation to identify the best examples that make sense and are easily understood by respondents.

Next time you are considering asking a rating scale question, consider using specific anchor case examples to provide context for your respondents, rather than giving anchors based solely on behavior.